Accelerate Machine Learning with Hogwild! Gradient Descent

Boost performance and efficiency with parallel computing techniques for modern machine learning.

Introduction

Optimization is at the heart of machine learning and computational mathematics. Among the many optimization techniques, gradient descent is particularly notable for its flexibility and effectiveness in large-scale problems. However, taking full advantage of modern multicore architectures can be tricky due to the memory synchronization overhead inherent in parallelizing gradient descent. The Hogwild! algorithm provides a lock-free method for parallelizing stochastic gradient descent (SGD), delivering near-linear speedups under specific sparsity assumptions.

This article explores the mathematical basis of gradients and gradient descent, examines the hurdles of parallelizing SGD, and offers a detailed explanation of Hogwild!

Gradients and Gradient Descent

The Gradient: A Mathematical Refresher

A gradient is the vector of partial derivatives of a multivariate function, pointing in the direction of steepest ascent. For a function \( f : \mathbb{R}^n \to \mathbb{R} \), the gradient is:

\[ \nabla f(x) = \begin{bmatrix} \frac{\partial f}{\partial x_1} \\ \frac{\partial f}{\partial x_2} \\ \vdots \\ \frac{\partial f}{\partial x_n} \end{bmatrix}, \]

where \( \frac{\partial f}{\partial x_i} \) is the partial derivative of \( f \) with respect to the \( i \)-th variable.

Gradient Descent: Iterative Optimization

Gradient descent minimizes a differentiable function through iterative updates. Starting with an initial point \( x_0 \), each iteration follows:

\[ x_{k+1} = x_k - \eta \nabla f(x_k), \]

where \( \eta > 0 \) is the learning rate (step size). Choosing \( \eta \) properly ensures convergence, often assuming that \( f(x) \) is convex and has a Lipschitz-continuous gradient:

-

Convexity

\[ f(x') \geq f(x) + \nabla f(x)^\top (x' - x), \quad \forall x, x' \in \mathbb{R}^n. \] -

Lipschitz Continuity

\[ |\nabla f(x') - \nabla f(x)| \leq L |x' - x|, \quad \forall x, x' \in \mathbb{R}^n. \]

Stochastic Gradient Descent (SGD)

For many machine learning tasks where \( f(x) \) is decomposed into terms for individual data points, stochastic gradient descent is essential. Rather than computing the gradient on the full dataset, it uses a random mini-batch (or single data point) at each step:

\[ x_{k+1} = x_k - \eta \nabla f_i(x_k), \]

where \( f_i \) is the cost corresponding to a randomly selected data point \( i \). This stochastic approach lowers computational expense and improves scalability.

Challenges in Parallelizing SGD

Sequential Nature of SGD

SGD updates are sequential by design: each step depends on the current state of the model parameters. To parallelize SGD, the data is split across multiple processors, but this leads to synchronization issues as they all access the same shared memory.

Synchronization Overhead

Traditional parallel SGD often relies on locking, which prevents concurrent writes to shared parameters. Unfortunately, locking can become a major bottleneck in multicore environments. The ideal solution is to reduce or eliminate such synchronization costs.

The Hogwild! Algorithm: A Lock-Free Paradigm

Hogwild! is a lock-free algorithm specifically devised to parallelize SGD by leveraging the sparsity of many real-world problems. Its distinguishing feature is allowing multiple processors to update shared parameters asynchronously, tolerating occasional overwrites. This strategy works particularly well when each update modifies only a small subset of parameters.

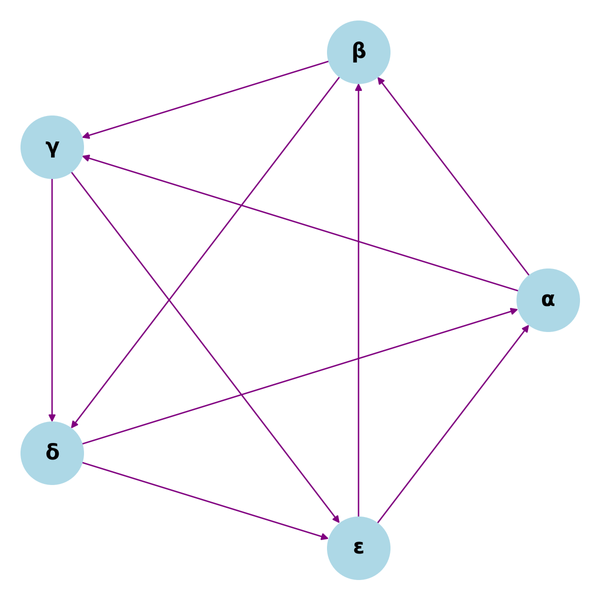

Problem Setup

Hogwild! targets optimization problems of the form:

\[ \min_{x \in \mathbb{R}^n} f(x) = \sum_{e \in E} f_e(x_e), \]

where \( E \) is a collection of subsets of \( {1,2,\ldots,n} \), and \( x_e \) refers to the parameters indexed by \( e \). The function \( f \) is said to be sparse if each \( f_e \) depends on only a small group of parameters.

Algorithm Steps

Each processor runs the following steps independently:

- Randomly select an edge \( e \in E \).

- Calculate the gradient \( G_e(x) = \nabla f_e(x_e) \).

- Update the relevant parameters:

\[ x_v \gets x_v - \eta , G_v(x), \quad \forall v \in e. \]

No locking is used, so these updates to shared memory can happen concurrently. In sparse scenarios, collisions are rare and the associated “noise” doesn’t significantly degrade convergence.

Sparsity Considerations

Hogwild!’s success hinges on certain measures of sparsity:

- Edge Size (\(\Omega\)): The maximum number of parameters any gradient depends on.

- Density (\(\Delta\)): The proportion of parameters involved in each update.

- Overlap (\(\rho\)): The fraction of updates that might interfere with each other.

Mathematical Analysis of Hogwild!

Convergence Assumptions

Hogwild! converges under standard convexity and Lipschitz conditions, supplemented by sparsity:

-

Strong Convexity

\[ f(x') \ge f(x) + \nabla f(x)^\top (x' - x) + \frac{c}{2}|x' - x|^2, \quad \forall x, x'. \] -

Bounded Gradients

\[ |\nabla f_e(x_e)| \le M, \quad \forall e \in E, ; x \in \mathbb{R}^n. \]

Convergence Rate

Because of the asynchronous nature, updates may be delayed by up to \(\tau\). The expected suboptimality after \( k \) steps satisfies:

\[ \mathbb{E}[f(x_k) - f^*] \le \frac{C}{k}, \]

where \( C \) depends on factors like \(\Omega, \rho, \Delta\), and \(\tau\).

Near-Linear Speedup

For sparse problems where \(\tau = o(n^{1/4})\), Hogwild! can deliver almost linear scaling with the number of processors \(p\). Collisions remain infrequent enough that they don’t undermine convergence speed.

Practical Applications and Experiments

Sample Use Cases

-

Sparse SVM

Text classification tasks (like RCV1) exhibit roughly 3x speedups over serial SGD. -

Matrix Completion

Collaborative filtering for large-scale datasets (e.g., Netflix Prize) benefits from Hogwild!’s parallel lock-free updates. -

Graph Cuts

Image segmentation problems can scale nearly linearly with the number of cores.

Empirical Outcomes

Benchmarks consistently show Hogwild! outperforming lock-based methods, sometimes by an order of magnitude. These gains are most prominent in highly sparse tasks where gradient evaluations are relatively cheap.

Pros and Cons of Hogwild!

Advantages

- Lock-Free Operation

Avoids synchronization overhead, enabling faster iteration. - Excellent Scalability

Close to linear speedup under sparse conditions. - Straightforward Implementation

No intricate coordination or locking schemes required.

Drawbacks

- Limited to Sparse Problems

Dense updates cause frequent write conflicts, reducing effectiveness. - Hardware Dependencies

Performance can rely on efficient atomic operations supported by the system.

Final Thoughts

Hogwild! is a breakthrough method for parallelizing SGD in a lock-free way, capitalizing on problem sparsity to achieve substantial speedups with minimal accuracy penalties. While it shines in sparse settings, ongoing research explores ways to extend its benefits to less sparse problems and various optimization frameworks.

Future developments may include adaptive collision avoidance and selective update ordering to further limit memory contention. Overall, Hogwild! exemplifies how combining theoretical insights with practical design can unlock new frontiers in efficient, scalable computation.

Recht, B., Re, C., Wright, S., & Niu, F. (2011). Hogwild: A lock-free approach to parallelizing stochastic gradient descent. Retrieved from https://people.eecs.berkeley.edu/~brecht/papers/hogwildTR.pdf