Building Nanograd: A Deep Dive into Automatic Differentiation

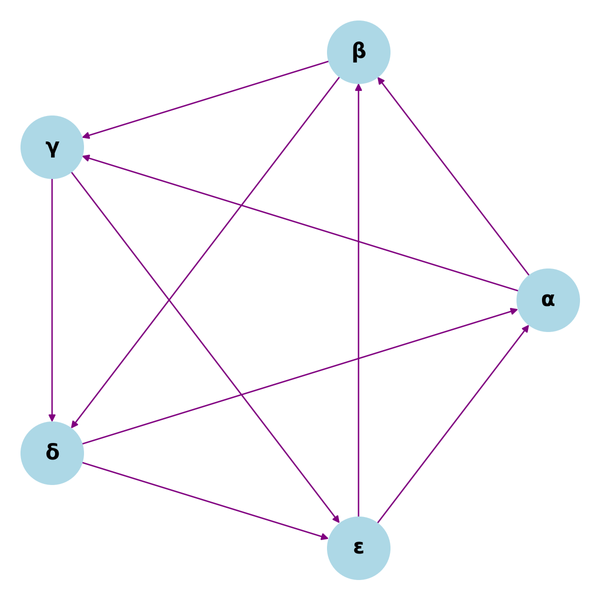

From First Principles to a Minimal Autograd Engine

1. Introduction & Motivation

Nanograd is a minimal automatic differentiation (autograd) engine that computes gradients for scalar-valued functions. Popularized by Andrej Karpathy, it demonstrates the core mechanics behind frameworks like PyTorch and TensorFlow. This guide constructs Nanograd from scratch, explaining:

1. Automatic