Building Nanograd: A Deep Dive into Automatic Differentiation

From First Principles to a Minimal Autograd Engine

1. Introduction & Motivation

Nanograd is a minimal automatic differentiation (autograd) engine that computes gradients for scalar-valued functions. Popularized by Andrej Karpathy, it demonstrates the core mechanics behind frameworks like PyTorch and TensorFlow. This guide constructs Nanograd from scratch, explaining:

- Automatic Differentiation (AD): How gradients are computed via backpropagation.

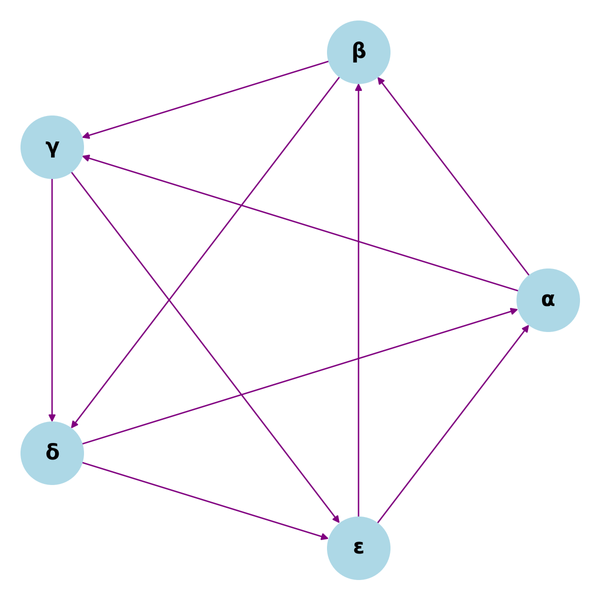

- Computational Graphs: Representing mathematical operations as directed acyclic graphs (DAGs).

- Dynamic Graph Construction: Tracking operations during forward passes.

2. Foundations of Automatic Differentiation

2.1 What is a Gradient?

For a function \( f: \mathbb{R}^n \rightarrow \mathbb{R} \), the gradient \( \nabla f \) is:

\[ \nabla f = \left[ \frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, ..., \frac{\partial f}{\partial x_n} \right]^T \]

Gradients indicate the direction of steepest ascent in \( f \).

2.2 Forward vs. Reverse Mode AD

- Forward Mode: Computes directional derivatives by iterating from inputs to outputs.

- Complexity: \( O(n) \) per output.

- Reverse Mode (Backpropagation): Computes gradients by traversing from outputs to inputs.

- Complexity: \( O(m) \) per input (favored in deep learning).

2.3 Chain Rule in Backpropagation

For a composite function \( f(g(x)) \), the chain rule states:

\[ \frac{df}{dx} = \frac{df}{dg} \cdot \frac{dg}{dx} \]

Reverse-mode AD recursively applies this rule through the computational graph.

3. Core Components of Nanograd

3.1 The Value Class

A Value object wraps a scalar and tracks its computational history:

class Value:

def __init__(self, data, _children=(), _op=''):

self.data = data # Scalar value

self.grad = 0.0 # Gradient (initially 0)

self._backward = lambda: None # Backward function

self._prev = set(_children) # Child nodes

self._op = _op # Operation label (for debugging)3.2 Computational Graph Representation

Each Value object is a node in a DAG. For an expression \( a + b \), the graph is:

+

/ \\

a b3.3 Gradient Initialization

- All grad attributes are initialized to 0.

- The output node’s gradient is set to 1.0 before backpropagation (since \( \frac{df}{df} = 1 \)).

4. Mathematical Operations & Their Gradients

4.1 Addition

def __add__(self, other):

other = other if isinstance(other, Value) else Value(other)

out = Value(self.data + other.data, (self, other), '+')

def _backward():

self.grad += 1.0 * out.grad # ∂(a+b)/∂a = 1

other.grad += 1.0 * out.grad # ∂(a+b)/∂b = 1

out._backward = _backward

return outGradient Derivation:

\[ \frac{\partial (a + b)}{\partial a} = 1, \quad \frac{\partial (a + b)}{\partial b} = 1 \]

4.2 Multiplication

def __mul__(self, other):

other = other if isinstance(other, Value) else Value(other)

out = Value(self.data * other.data, (self, other), '*')

def _backward():

self.grad += other.data * out.grad # ∂(a*b)/∂a = b

other.grad += self.data * out.grad # ∂(a*b)/∂b = a

out._backward = _backward

return outGradient Derivation:

\[ \frac{\partial (a \cdot b)}{\partial a} = b, \quad \frac{\partial (a \cdot b)}{\partial b} = a \]

4.3 Activation Functions (Tanh)

def tanh(self):

x = self.data

t = (math.exp(2*x) - 1)/(math.exp(2*x) + 1)

out = Value(t, (self,), 'tanh')

def _backward():

self.grad += (1 - t**2) * out.grad # ∂tanh(x)/∂x = 1 - tanh²(x)

out._backward = _backward

return outGradient Derivation:

\[ \frac{\partial \tanh(x)}{\partial x} = 1 - \tanh^2(x) \]

5. Backpropagation Algorithm

5.1 Topological Sorting

To apply the chain rule correctly, nodes must be processed in reverse topological order:

- Forward Pass: Build the computational graph.

- Backward Pass:

- Initialize output gradient to 1.0.

- Sort nodes topologically (parents before children).

- Apply

_backward()in reverse order.

5.2 Implementing backward()

def backward(self):

topo = []

visited = set()

def build_topo(v):

if v not in visited:

visited.add(v)

for child in v._prev:

build_topo(child)

topo.append(v)

build_topo(self)

self.grad = 1.0

for v in reversed(topo):

v._backward()6. From Scratch Implementation

6.1 Full Value Class

import math

class Value:

def __init__(self, data, _children=(), _op=''):

self.data = data

self.grad = 0.0

self._backward = lambda: None

self._prev = set(_children)

self._op = _op

def __add__(self, other):

# ... (as defined in Section 4.1)

def __mul__(self, other):

# ... (as defined in Section 4.2)

def tanh(self):

# ... (as defined in Section 4.3)

def __repr__(self):

return f"Value(data={self.data}, grad={self.grad})"6.2 Testing the Engine

Example 1: Simple Expression

a = Value(2.0)

b = Value(3.0)

c = a * b + a.tanh()

c.backward()

print(a.grad) # ∂c/∂a = b + (1 - tanh²(a)) ≈ 3 + (1 - 0.964) ≈ 3.036Example 2: Neural Network Neuron

# Inputs

x1 = Value(0.5)

x2 = Value(-1.0)

w1 = Value(1.0)

w2 = Value(1.0)

b = Value(0.1)

# Forward pass

out = (x1 * w1 + x2 * w2 + b).tanh()

out.backward()

print(w1.grad) # ∂out/∂w1 = (1 - tanh²(z)) * x1 ≈ (0.75) * 0.5 = 0.3757. Advanced Topics

7.1 Efficiency Considerations

- Scalar Limitation: Nanograd handles scalars, but real frameworks use tensors for vectorization.

- Operator Overloading: PyTorch uses C++ extensions for speed; Python is ~100x slower.

7.2 Extending Nanograd

- More Operations: Add

exp,log, orReLU. - GPU Support: Implement tensor operations with CUDA.

- Gradient Checkpointing: Reduce memory usage by recomputing intermediates.

7.3 Comparison with PyTorch Autograd

8. Mathematical Proofs

8.1 Chain Rule for Composite Functions

For \( f(g(x)) \), the derivative is:

\[ \frac{df}{dx} = \frac{df}{dg} \cdot \frac{dg}{dx} \]

Proof:

By definition,

\[ \frac{df}{dx} = \lim_{h \to 0} \frac{f(g(x+h)) - f(g(x))}{h} \]

Multiply and divide by \( g(x+h) - g(x) \):

\[ \frac{df}{dx} = \lim_{h \to 0} \left[ \frac{f(g(x+h)) - f(g(x))}{g(x+h)-g(x)} \cdot \frac{g(x+h)-g(x)}{h} \right] = \frac{df}{dg} \cdot \frac{dg}{dx} \]

9. Applications

9.1 Training a Neural Network

# 2-layer neural network

class Neuron:

def __init__(self, n_inputs):

self.w = [Value(np.random.randn()) for _ in range(n_inputs)]

self.b = Value(np.random.randn())

def __call__(self, x):

act = sum((xi * wi for xi, wi in zip(x, self.w)), self.b)

return act.tanh()

# Loss function: Mean Squared Error (MSE)

def loss(y_pred, y_true):

return sum((yp - yt)**2 for yp, yt in zip(y_pred, y_true))9.2 Optimizers (SGD)

def sgd(params, lr=0.01):

for p in params:

p.data -= lr * p.grad

p.grad = 0.0 # Reset gradients10. Limitations & Future Work

10.1 Nanograd Limitations

- Scalar-Only: No support for tensors or batches.

- No GPU Acceleration: Pure Python implementation.

- Basic Ops: Limited to

+,*, andtanh.

10.2 Extensions

- Tensor Support: Add multi-dimensional arrays.

- Autograd for CNNs: Implement convolution and pooling.

- Just-In-Time (JIT) Compilation: Optimize with Numba or C++.

11. Conclusion

Nanograd demystifies automatic differentiation by implementing reverse-mode AD in ~100 lines of Python. While simplistic, it captures the essence of modern autograd systems. By extending it with tensor operations, GPU support, and more layers, you can build a production-ready deep learning framework.

References

- Karpathy, A. (2020). Micrograd: A tiny scalar-valued autograd engine.

- Baydin, A. G. et al. (2018). Automatic Differentiation in Machine Learning: A Survey.

- Paszke, A. et al. (2017). Automatic differentiation in PyTorch.

Appendices

- A1: Full Nanograd Code with Comments.

- A2: Derivation of Common Activation Gradients (ReLU, Sigmoid).

- A3: Benchmarking Nanograd vs. PyTorch.